A couple of years ago, we decided we had to wind down our collection of U.S. Congressional documents. Not only do we have no room for any more, we have no room for what we have. This problem of having too much has led to our digitization program, about which I will have much to say later. Moving forward, however, we still want to maintain a collection of congressional documents, even without the shelf space. The solution is to download the documents from the GPO and add them to our growing collection of digitized documents.

On one level, the idea for doing this is really simple: the documents are available on the GPO websites, so just download them and add them to the repository. I know: why not just wait until law.gov makes everything available in bulk, or until the GPO makes bulk electronic downloads part of the new depository system? If and when that happens, we will be on board. In the meantime, we would like to have as complete a collection of legislative history materials as possible. Without more paper.

As stated, the basic idea is really simple: download the documents. Of course, dealing with thousands of documents in a programmatic manner makes it more of a challenge.

Downloading:

The technique we use for this is slightly more than basic screen-scrape harvesting. But not by much. The theory was to use a Perl script which essentially would perform a search of the FDsys collection and download the material linked from the search results page. This can be done, provided that FDsys presents material in predictable ways, which, fortunately, seems to be the case.

As it turns out, the approach with the FDsys material does not involve a search as such, but rather a structured drill-down into the menued browsing options presented in the system. In practice, the programming is similar to canning a search, but is more predictable. It also requires a little more looping in the program.

For reasons that probably have more to do with the way I think than anything else, I found that the easiest way to approach programming a drill-down to documents was by using the congressional committee browse page. It’s the page that is found here: http://www.gpo.gov/fdsys/browse/committeetab.action. The page looks like this:

The nice thing about this page is that we can start drilling down without dealing with JavaScript or anything else. At least at the start. From here, we can parse out the links for the individual committees. These have very regular structures, as with the Senate Finance Committee: http://www.gpo.gov/fdsys/browse/committee.action?chamber=senate&committee=finance. Of course, we could just program an array of the House and Senate committees to make things faster, but by parsing this page, we can rely on the GPO to keep the listings of committees up to date. In addition, should the GPO alter their URL/directory structure, one small change in a regular expression will fix the whole thing. Much less work in the long run, and very little added burden to GPO.

What is actually going on in the Perl script at this point is that the wget utility is opened as a filehandle, with the downloaded page sent to STDOUT. The script reads that output line by line in a while loop, looking for the following pattern:

/http:\/\/www\.gpo\.gov\/fdsys\/browse\/committee\.action\?chamber=(\w+)\&committee=(.*?)\">/ , where $1 is the chamber and $2 is the committee.
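To make the loop concrete, here is a minimal sketch of reading a page from a filehandle and collecting the chamber and committee from each matching link. The subroutine name `extract_committees` and the two lines of sample markup are made up for illustration; the real browse page may be laid out differently, so treat this as a starting point rather than the script we actually run.

```perl
use strict;
use warnings;

# Read HTML from a filehandle and return [chamber, committee] pairs
# for every committee.action link found. (Hypothetical helper name.)
sub extract_committees {
    my ($fh) = @_;
    my @found;
    while (my $line = <$fh>) {
        # (\w+) captures the whole chamber name; (.*?) stops at the closing quote.
        while ($line =~ m{http://www\.gpo\.gov/fdsys/browse/committee\.action\?chamber=(\w+)&committee=(.*?)">}g) {
            push @found, [$1, $2];
        }
    }
    return @found;
}

# Made-up sample markup standing in for the real browse page.
my $sample = <<'HTML';
<a href="http://www.gpo.gov/fdsys/browse/committee.action?chamber=senate&committee=finance">Finance</a>
<a href="http://www.gpo.gov/fdsys/browse/committee.action?chamber=house&committee=judiciary">Judiciary</a>
HTML

# An in-memory filehandle keeps the example runnable without network access.
open(my $fh, '<', \$sample) or die "cannot open in-memory sample: $!";
my @committees = extract_committees($fh);
close($fh);

print "$_->[0]\t$_->[1]\n" for @committees;
```

Against the live site, the in-memory handle would be swapped for a pipe from wget, for example `open(my $fh, '-|', 'wget', '-q', '-O', '-', $url)`, where `$url` is the browse page address.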

At this point, we have the information we need to take some shortcuts. The link we have grabbed will generate a page with Ajax code. That code will allow expansion of some categories, first by document type (hearings, prints, reports), and then by Congress. The thing to do is to add that information to the request ourselves, so we can get straight to the document links. The Perl line is this:

open(GETLIST, "wget \"http://www.gpo.gov/fdsys/browse/committeecong.action?collection=$collec&chamber=$chamb&committee=$comtee&congressplus=$congno&ycord=0\" -q -O -|"); # all in one line, of course.

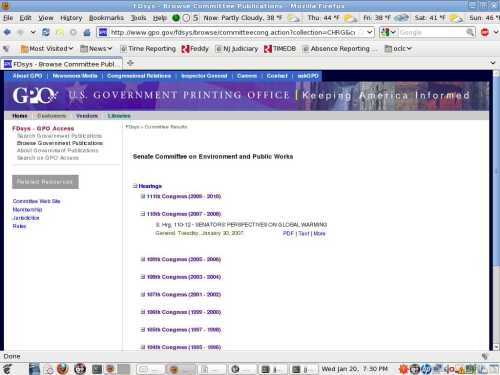

Where $collec is the document type (CHRG for hearings, CRPT for reports, or CPRT for prints), $chamb is the previously grabbed House or Senate, $comtee is the previously grabbed committee string, and $congno is the congress for which you want to gather material. Depending on what and how much you want, additional nested loops would be used to cycle through document types and Congresses. The page that will be opened and parsed actually appears like this:

As the above filehandle is read through its own while loop, the PDF, Text, and More links can be identified with a regular expression. In our case, what we really want is the “More” link. It creates a little more work, but is well worth it.

This “More” link does not download a document, but links to one last page. This page contains a link to a ZIP file which holds both text and PDF versions of the document, as well as PREMIS and MODS metadata files. So, we grab the link to the “More” page, download it to STDOUT like all the previous pages, and actually save the ZIP file with this:

system("wget -O $filen -w 3 -nc --random-wait \"http://www.gpo.gov/fdsys/delivery/getpackage.action?$zip\"");

where $zip is the file name of the ZIP file, and $filen is the filename that we want to save the file as.
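One variation worth considering is the list form of system(), which hands the arguments straight to wget without going through the shell, so the URL needs no escaped quotes. The sketch below uses the same switches and the same $zip and $filen variables; the subroutine names and the example values are hypothetical.

```perl
use strict;
use warnings;

# Build the wget argument list for one package download.
# $zip is the value taken from the "More" page; $filen is the local file name.
sub wget_args {
    my ($zip, $filen) = @_;
    return (
        'wget', '-O', $filen, '-w', '3', '-nc', '--random-wait',
        "http://www.gpo.gov/fdsys/delivery/getpackage.action?$zip",
    );
}

# Run the download and return true if wget exited cleanly.
# (Hypothetical helper; not called in this example.)
sub fetch_package {
    my ($zip, $filen) = @_;
    # List-form system() bypasses the shell, so "?" and "&" need no quoting.
    return system(wget_args($zip, $filen)) == 0;
}

# Show the command that would be run, using placeholder values.
print join(' ', wget_args('EXAMPLE', 'example.zip')), "\n";
```

Checking the return value of system() also makes it easy to log any package that failed to download and retry it later.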

A word on politeness to the GPO: most of those who would be inclined to actually do what I’m writing about here already know this, but in order for this sort of thing to work, it must be done in a manner that will not bring down the GPO servers. We’re not doing this to be mean, right? So, the “-w 3 -nc --random-wait” switches in that last wget call are very important. The -w 3 and --random-wait ensure that the program will wait an average of 3 seconds before downloading. This slows things down, but relieves the potential load that a program like this might put on the remote server. In the case of the previous pages, this is not necessary because they are rather small XHTML files, and are only read once. The ZIP files are often in the megabytes, and, if you are looping through Congresses and document types, there are thousands of them.
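For anyone setting up the outer loops, here is a rough sketch of cycling through document types and Congresses while keeping a randomized pause available. One caveat to check against the wget manual: as far as I can tell, its wait switches apply between retrievals within a single wget run, so a script that launches wget once per file may want its own pause as well. The subroutine names, the Congress numbers, and the fixed chamber and committee are example assumptions only.

```perl
use strict;
use warnings;

# Build the committeecong.action URL for one combination of parameters.
sub listing_url {
    my ($collec, $chamb, $comtee, $congno) = @_;
    return "http://www.gpo.gov/fdsys/browse/committeecong.action"
         . "?collection=$collec&chamber=$chamb&committee=$comtee"
         . "&congressplus=$congno&ycord=0";
}

# Pick a pause between 0.5 and 1.5 times the base wait, in seconds,
# so the average pause equals the base.
sub polite_pause {
    my ($base) = @_;
    return $base * (0.5 + rand());
}

my @collections = qw(CHRG CRPT CPRT);   # hearings, reports, prints
my @congresses  = (110, 111);           # example values only

for my $collec (@collections) {
    for my $congno (@congresses) {
        my $url = listing_url($collec, 'senate', 'finance', $congno);
        print "$url\n";
        # Here the script would fetch the listing, follow each "More" link,
        # and save each ZIP file. After every ZIP download, pause with:
        #   select(undef, undef, undef, polite_pause(3));
    }
}
```

The four-argument select() call is the usual Perl idiom for sleeping a fractional number of seconds without loading extra modules.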

By all means, get them all for your own repository. But be kind.

Next Post: What to do with all these files once you have them.

Pingback: Joergensen on Embedded Metadata & Harvesting Congressional Documents « Legal Informatics Blog

John: Great post. FYI, I just saw that Maarten Marx has recently posted a similar set of instructions for harvesting & marking up European Parliamentary documents, at PoliticalMashup: http://j.mp/dqwyFd . May be of interest.